How to use AWS Bedrock to access foundational AI models

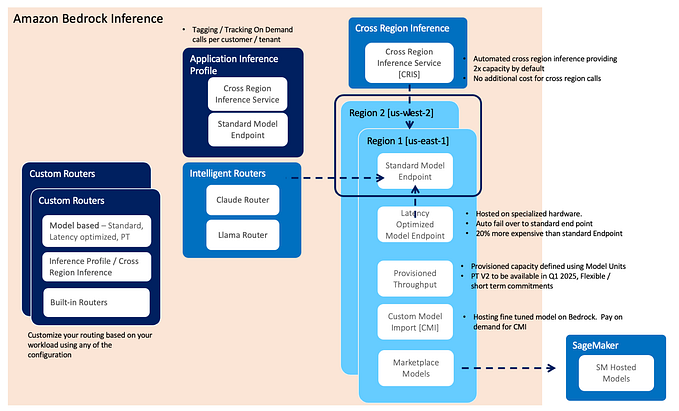

AWS Bedrock is a fully managed service from AWS that provides a single access point for popular foundational models from leading AI companies. These models include Llama, Titan, Claude, Stable Diffusion, Mistral, Command, and Jamba 1.5. Another big plus is on-demand pricing, which helps keep access to the models affordable, with no infrastructure to manage.

To get started, go to the AWS console, select AWS Bedrock, then select the model you wish to enable.

Select a foundational model, e.g. Llama, then click Request model access.

Click the Modify model access button to select the models to enable.

Tick the checkboxes for the models you wish to have access to, then click Next.

Review the models you've selected and click Submit to accept the EULA.

To access the models programmatically using Python, install the AWS SDK for Python (boto3) using the following command:

    pip install boto3

Ensure you have obtained the credentials needed to access AWS programmatically, namely the access key ID and the secret access key. In addition, obtain the model ID of the model you wish to prompt (model IDs are listed in the AWS Bedrock documentation); it is passed as the modelId parameter when invoking the model. You're all set: you can now run the code example below.
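If the model ID is not at hand, it can also be looked up with boto3 before running the main example. The sketch below is only an illustration under a few assumptions: `list_model_ids` and `print_provider_model_ids` are hypothetical helper names, the client passed in is the control-plane client `boto3.client('bedrock')` (not `bedrock-runtime`), and credentials are already available to boto3, for instance through environment variables.

```python
def list_model_ids(bedrock_client, provider=None):
    """Return model IDs reported by the Bedrock control-plane client.

    `bedrock_client` is assumed to be boto3.client("bedrock").
    `provider` optionally keeps only models whose provider name matches
    (compared case-insensitively on the client side).
    """
    response = bedrock_client.list_foundation_models()
    summaries = response["modelSummaries"]
    if provider is not None:
        wanted = provider.lower()
        summaries = [
            s for s in summaries
            if s.get("providerName", "").lower() == wanted
        ]
    return [s["modelId"] for s in summaries]


def print_provider_model_ids(provider="Meta", region="us-east-1"):
    """Hypothetical usage helper: prints the IDs for one provider."""
    import boto3  # imported here so list_model_ids stays dependency-free

    client = boto3.client("bedrock", region_name=region)
    for model_id in list_model_ids(client, provider=provider):
        print(model_id)
```

Calling `print_provider_model_ids()` prints one ID per line; any of them can then be used in place of the model ID placeholder in the example. Note that being listed in a region does not by itself mean access has been granted in the console.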

    import json

    import boto3

    # Set up AWS credentials explicitly (placeholders; never commit real keys)
    aws_access_key_id = 'AWS_ACCESS_KEY_ID'  # set access key ID
    aws_secret_access_key = 'AWS_SECRET_ACCESS_KEY'  # set secret access key
    aws_region = 'us-east-1'  # Adjust to your AWS region

    # Create Bedrock client
    bedrock = boto3.client(
        'bedrock-runtime',
        region_name=aws_region,
        aws_access_key_id=aws_access_key_id,
        aws_secret_access_key=aws_secret_access_key
    )

    try:
        prompt = 'What is the capital city of America?'

        # Model invocation. The body below uses the request format of the
        # Llama models; other model families expect different fields.
        response = bedrock.invoke_model(
            modelId='MODEL_ID',  # Replace with the correct model ID
            body=json.dumps({
                "prompt": prompt,
                "max_gen_len": 2048,  # Specify the maximum number of tokens to use in the generated response
                "temperature": 0.7,  # Use a lower value to decrease randomness in the response. MAX VALUE IS 1.0
                "top_p": 0.5  # Use a lower value to ignore less probable options. Set to 0 or 1.0 to disable.
            }),
            accept='application/json',
            contentType='application/json'
        )

        # Read and print the raw response
        response_body = response['body'].read().decode('utf-8')
        print(response_body)

        # Parse the JSON and print only the generated text
        parsed = json.loads(response_body)
        print("Response from the model:", parsed["generation"])
    except Exception as e:
        print("An error occurred:", e)
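The example above hard-codes the keys for simplicity. A common alternative is to keep them out of the source and read them from the standard AWS environment variables (`AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, `AWS_DEFAULT_REGION`). boto3 already consults these variables on its own when no keys are passed, so the sketch below only makes that explicit; `bedrock_client_kwargs` and `make_bedrock_runtime_client` are hypothetical helper names, not part of boto3.

```python
import os


def bedrock_client_kwargs(env=None, default_region="us-east-1"):
    """Build keyword arguments for boto3.client("bedrock-runtime", ...).

    Keys are read from the standard AWS environment variables. If either
    key is missing, only the region is returned, which leaves boto3 free
    to fall back to its default credential lookup.
    """
    env = os.environ if env is None else env
    kwargs = {"region_name": env.get("AWS_DEFAULT_REGION", default_region)}
    key_id = env.get("AWS_ACCESS_KEY_ID")
    secret = env.get("AWS_SECRET_ACCESS_KEY")
    if key_id and secret:
        kwargs["aws_access_key_id"] = key_id
        kwargs["aws_secret_access_key"] = secret
    return kwargs


def make_bedrock_runtime_client():
    """Hypothetical usage helper: creates the runtime client from the environment."""
    import boto3  # imported here so bedrock_client_kwargs needs only the stdlib

    return boto3.client("bedrock-runtime", **bedrock_client_kwargs())
```

With this in place, `bedrock = make_bedrock_runtime_client()` can stand in for the explicit client creation, and the keys never appear in the script itself.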

For more in-depth information, refer to the official AWS Bedrock documentation. Happy coding!